A/B split testing is a method used to compare two versions of a webpage to determine which one performs better. It involves creating two variations (A and B) of the same element and randomly dividing the audience into groups to see which version yields better results.

In A/B split testing, the two versions of the webpage (A and B) are typically identical except for one element that is being tested. This element could be a headline, button, layout, image, or any other element of the webpage. By measuring performance metrics such as click-through rates, conversion rates, or engagement levels, the effectiveness of the different variations can be assessed, and the version that performs better can be identified.
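
As a rough illustration of how such metrics are compared, the sketch below computes a conversion rate for each version and the relative lift of B over A. The function names and visitor counts are hypothetical, chosen only for this example.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Fraction of visitors who completed the desired action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Relative change of B over A (0.25 means B is 25% higher than A)."""
    if rate_a == 0:
        raise ValueError("rate_a must be non-zero")
    return (rate_b - rate_a) / rate_a


# Hypothetical counts, for illustration only
rate_a = conversion_rate(conversions=40, visitors=1000)  # 0.04
rate_b = conversion_rate(conversions=50, visitors=1000)  # 0.05
lift = relative_lift(rate_a, rate_b)                     # roughly 0.25

print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  lift: {lift:.0%}")
```

A higher rate for B in a sample like this does not by itself show that B is better; the difference still has to be checked for statistical significance.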

A/B split testing helps in making data-driven decisions and optimizing the performance of webpages by identifying which version generates higher user engagement or achieves the desired goals. It is commonly used in website optimization, email marketing, online advertising, and user experience (UX) research.

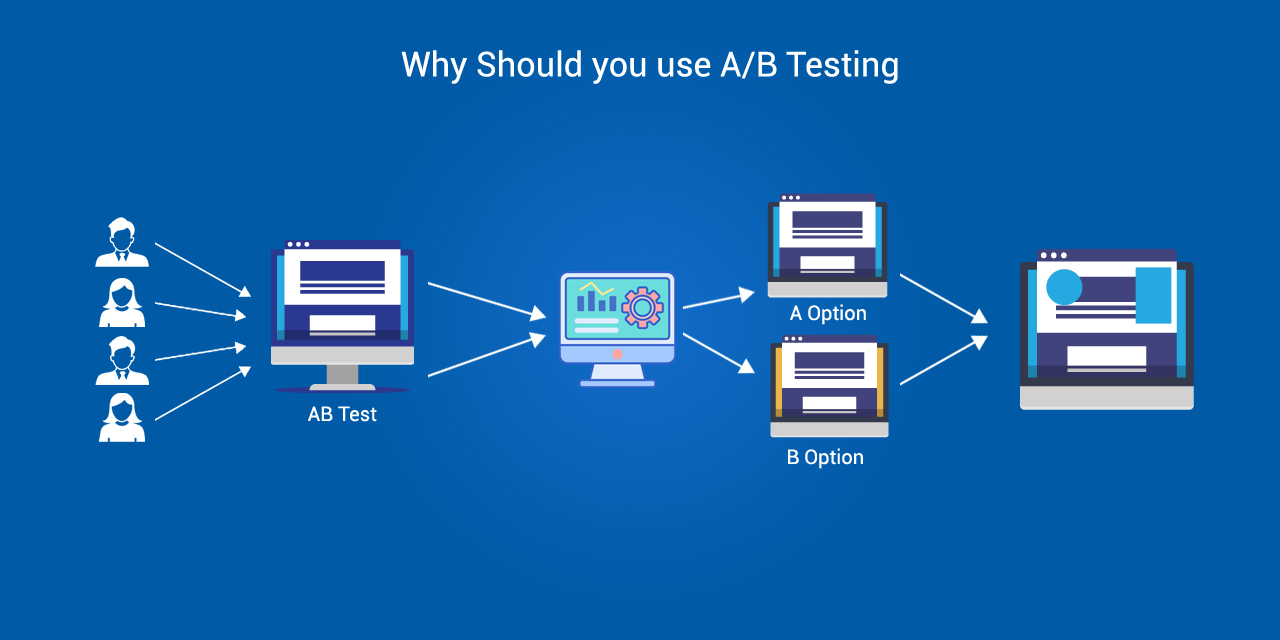

Why should you use A/B Testing?

A/B testing is a powerful tool that helps optimize marketing efforts, enhance user experience, and make data-driven decisions to achieve better results and improve business outcomes. A/B testing is valuable for the following reasons:

- Data-Driven Decision: A/B testing provides objective data and insights that help make informed decisions based on actual user behavior rather than relying on assumptions or personal preferences.

- Optimization and Improvement: A/B testing continuously optimizes and improves landing pages, emails, or other marketing elements by identifying the most effective variations that lead to higher conversion rates, engagement, or desired outcomes.

- User Experience Enhancement: A/B testing helps enhance the user experience by identifying changes that positively impact user engagement and usability.

- Reduced Risk: By testing variations before implementing them widely, A/B testing helps mitigate the risks associated with making significant changes that could negatively impact your website.

- Cost-Effectiveness: A/B testing can help optimize marketing campaigns, ad creatives, or website elements, raising conversion rates or ROI without significantly increasing advertising budgets.

- Continuous Learning: A/B testing allows you to gather insights about your audience's preferences and behaviors over time, enabling you to refine and improve your marketing strategies and tactics.

- Competitor Analysis: A/B testing can also be used to try approaches you have seen competitors use and measure their impact on your own audience before adopting them.

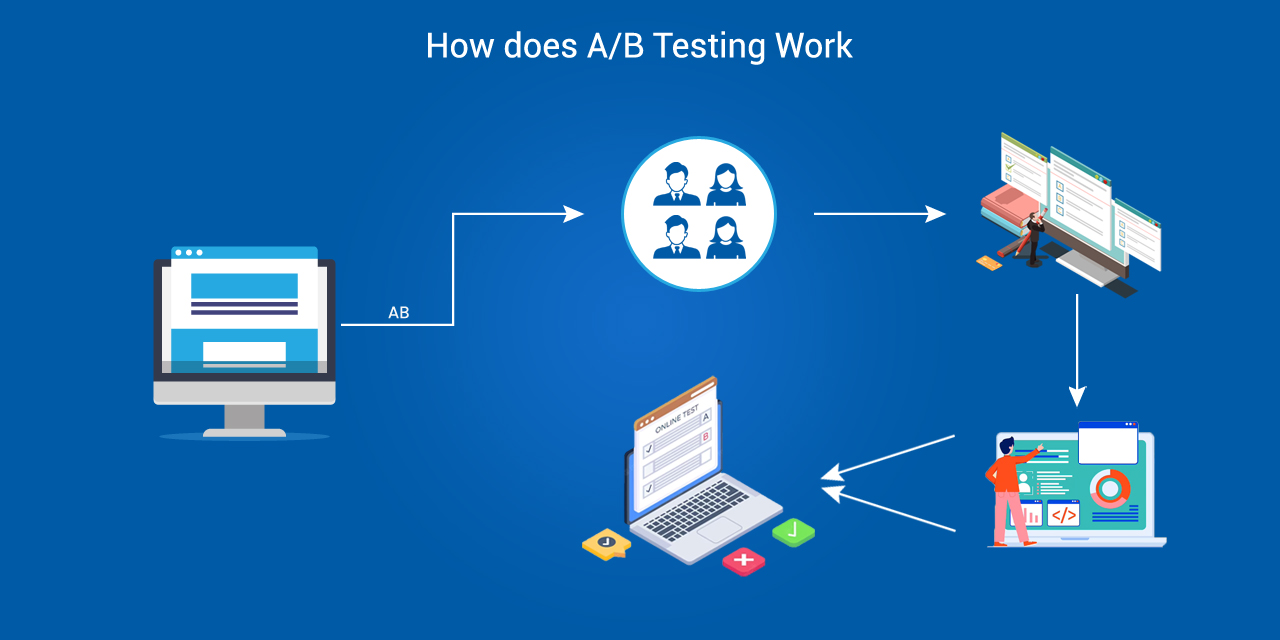

How does A/B Testing work?

A/B testing works by comparing two or more variations of a specific element or variable to determine which version performs better in terms of achieving a desired outcome. Here's how it typically works:

- User Segmentation: Your audience is divided into groups by randomly assigning each user to one of the variations being tested. Random assignment helps ensure that the results are not biased by factors such as user demographics or behavior.

- Variation Views: Users in each segment are presented with one specific variation of the element being tested. For example, 50% of the users will see version A, while the rest will see version B.

- User Interaction and Data Collection: Users interact with the webpage, email, or marketing material, and their behavior is tracked and recorded. Key metrics, such as click-through rates, conversion rates, or engagement levels, are collected for each variation.

- Statistical Analysis: The collected data is subjected to statistical analysis to determine if the observed differences in performance between the variations are statistically significant. This analysis helps identify whether one version outperforms the others.

- Result Interpretation: Based on the statistical analysis, conclusions are drawn about the performance of each variation. The version that performs better in achieving the desired outcome is typically considered the winner.

- Implementation and Iteration: If a statistically significant difference is observed, the winning version is implemented as the default version. Further iterations and tests can be conducted to continually refine and optimize the element being tested.
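
The assignment step is often done by hashing a stable user identifier, so that a returning user always sees the same version. The following is a minimal sketch of that idea, not a description of any particular testing tool; the `assign_variant` function, the user IDs, and the experiment name are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a user to a variant deterministically, with a roughly even split."""
    # Including the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Simulate 10,000 hypothetical users and count how many land in each group.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-headline")] += 1

print(counts)
```

Because the hash output is effectively uniform, the two groups come out close to 50/50, and calling the function again with the same user ID returns the same variant.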

The process of A/B testing allows you to make data-driven decisions by objectively comparing different variations and determining which one yields the best results. It helps identify the elements or strategies that lead to improved user engagement, conversion rates, or other desired outcomes, ultimately leading to better website performance, marketing effectiveness, and user experience.
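
For conversion-style metrics, one common way to carry out the statistical analysis step is a two-proportion z-test. The sketch below assumes that test is appropriate (large samples, independent users) and uses only the Python standard library; the function name and the counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates cannot be compared")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 200 of 5,000 visitors converted on A, 250 of 5,000 on B.
z, p_value = two_proportion_z_test(200, 5000, 250, 5000)
significant = p_value < 0.05  # 0.05 is a conventional threshold, not a rule

print(f"z = {z:.2f}, p = {p_value:.4f}, significant: {significant}")
```

The significance threshold should be chosen before the test starts, and the test should run until the planned sample size is reached rather than being stopped the first time the p-value dips below the threshold.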

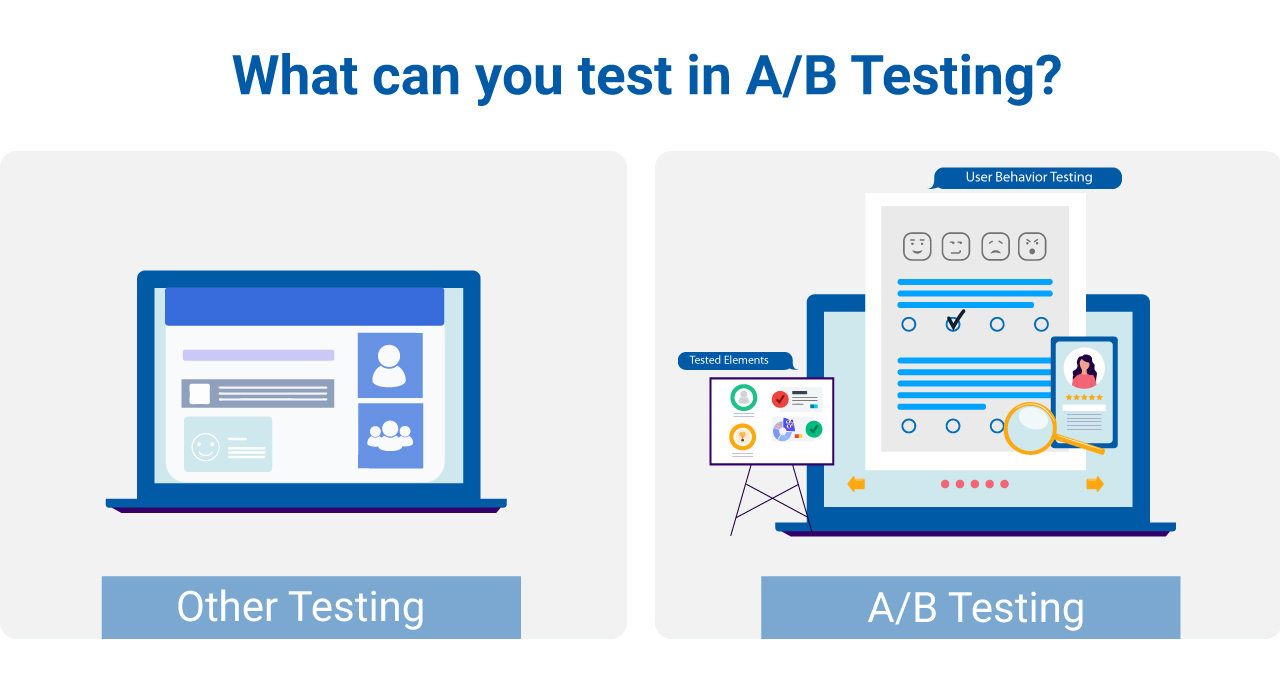

What can you test in A/B Testing?

In A/B testing, you can test various elements and variables to determine their impact on user behavior and outcomes. Here are some commonly tested elements:

- Headlines and Titles: Test different variations of headlines or titles to see which ones generate higher click-through rates or engagement.

- Call-to-Action (CTA) Buttons: Experiment with different button colors, sizes, shapes, wording, or placement to determine the most effective version for encouraging desired actions.

- Layout and Design: Test different layouts, designs, or visual elements to assess their impact on user engagement, readability, or conversion rates.

- Images: Compare the performance of different images to identify the most compelling visual content that resonates with your audience.

- Content Length: Test variations in content length (short vs. long) to understand how it affects user engagement, time on page, or conversion rates.

- Forms and Fields: Experiment with different form layouts, input fields, or form lengths to optimize conversion rates or user completion.

- Pricing and Discounts: Test different pricing models, discount offers, or pricing displays to determine the most effective strategy for maximizing sales or conversions.

- Email Subject Lines: Test various subject lines to identify the ones that improve open rates and click-through rates in email marketing campaigns.

- Page Elements: Test different elements such as testimonials, social proof, trust badges, or reviews to assess their impact on user trust, credibility, and conversion rates.

- Navigation and Menus: Experiment with different navigation structures or menu designs to optimize user experience, ease of navigation, or time spent on the site.

The specific elements you choose to test should align with your goals, target audience, and the specific metrics you want to improve. In a standard A/B test, change only one element at a time; if several elements differ between the versions, you cannot tell which change caused the difference in results.

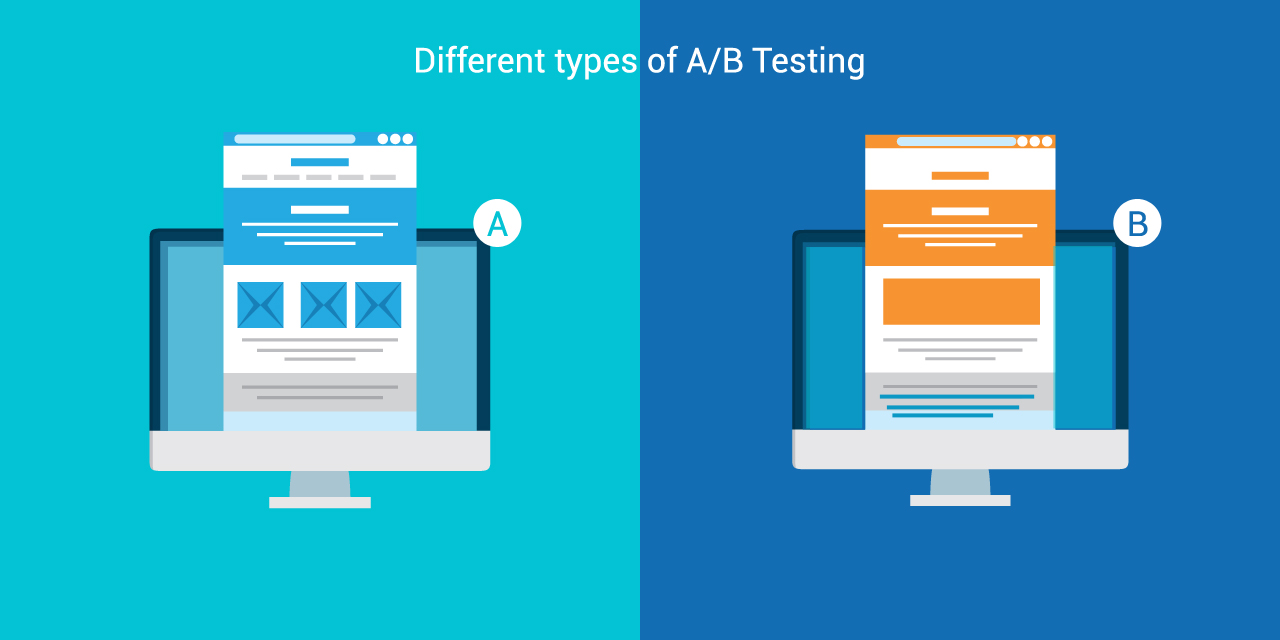

What are different types of A/B Testing?

There are several different types of A/B testing that you can employ depending on your goals and the elements you want to test. Here are some common types:

- A/B Testing: This is the standard type of A/B testing where you compare two variations, A and B, to determine which one performs better.

- Multivariate Testing: In multivariate testing, you test multiple variations of multiple elements simultaneously to understand how different combinations impact user behavior and outcomes. This allows you to assess the interaction effects among various elements.

- Split URL Testing: Split URL testing involves creating entirely different versions of a webpage and diverting a portion of your traffic to each version. This type of testing is useful when you want to compare different website designs, user flows, or major structural changes.

- A/B/n Testing: A/B/n testing is similar to A/B testing but involves testing more than two variations. It allows you to compare the performance of multiple variations and identify the most effective one.

- Redirect Testing: Redirect testing involves sending different segments of your audience to completely separate web pages. It is commonly used to test major changes or alternative designs that require a different user flow.

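- Sequential Testing: As used here, sequential testing means running tests one after another in a series of iterations, so that each test builds on what the previous ones taught you and the element or design is refined gradually. (In statistics, the term more often refers to methods that evaluate results as data arrives and allow a test to stop early.)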

- Personalization Testing: Personalization testing involves tailoring content based on individual user characteristics. It allows you to test personalized variations to determine which personalized approach yields the best results.

The choice of the testing type depends on your specific goals, the complexity of the elements you want to test, and the resources available for conducting the tests. It's important to select the most suitable type of testing to gather meaningful insights and make informed decisions.
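
One practical difference between these types is how many versions need traffic. In multivariate testing, the number of combinations is the product of the number of options per element, which the following sketch makes concrete. The element names, option values, and traffic figure are hypothetical.

```python
from itertools import product

# Hypothetical elements and the options to test for each
elements = {
    "headline": ["Save time", "Save money"],
    "cta_color": ["green", "orange"],
    "hero_image": ["photo", "illustration", "none"],
}

# Every combination of one option per element: 2 * 2 * 3 = 12 versions
combinations = [
    dict(zip(elements, values)) for values in product(*elements.values())
]

total_visitors = 60_000  # hypothetical traffic available for the test
visitors_per_combination = total_visitors // len(combinations)

print(len(combinations), "combinations,", visitors_per_combination, "visitors each")
```

With the same traffic, a plain A/B test would give each version 30,000 visitors instead of 5,000, which is why multivariate tests generally need more traffic or longer run times to reach reliable conclusions.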

Conclusion

A/B testing is a method used to compare two or more variations of a specific element (or variable) to determine which one performs better in achieving a desired outcome. It involves randomly assigning users to the variations, typically in equal proportions. User interactions are tracked, and statistical analysis is applied to determine whether the differences in performance between the variations are statistically significant. The winning version is implemented, and the process can be repeated to keep improving the element being tested. A/B testing enables data-driven decision-making, enhances user experience, and improves desired outcomes by identifying effective strategies based on empirical evidence.
