The demand for 3D content is exploding across industries, from game development and film to product design and e-commerce. However, traditional 3D model creation remains fragmented, requiring multiple specialized and complex software tools. This technical barrier has long stifled creativity and slowed production pipelines.

This is where a modern AI 3D model generator steps in to redefine the process. Instead of focusing solely on generation, today’s AI-native platforms unify the entire asset creation workflow, from concept to production-ready 3D models, within a single environment.

Solving the Fragmented 3D Creation Problem

For years, 3D content creation has been hampered by disjointed workflows. Artists and designers often juggle multiple tools—one for modeling, another for texturing, a separate application for rigging, and others for optimization. Constantly switching between software drains creative energy and shifts focus from innovation to technical management.

AI-driven 3D platforms aim to solve this problem by streamlining the entire process into a cohesive system. Rather than acting as standalone generators, these platforms integrate generation, editing, optimization, and export in a single workspace, guiding users through the full asset development lifecycle.

Most unified platforms now organize workflows around key functions such as:

- Batch Generation: Producing multiple 3D models from text or image inputs.

- Mesh Optimization / Low-Poly Workflows: Automatically refining geometry for real-time performance.

- Topology Controls: Offering tools to adjust mesh structure for various project needs.

- Segmented Generation: Creating models in editable parts for easier customization.

- Asset Libraries: Managing models and collections efficiently.

- Editable Properties: Adjusting transformations and materials with precision.

- Non-Destructive History: Tracking changes and enabling fluid experimentation.

How AI-Native Platforms Revolutionize the 3D Workflow: Core Features

Modern AI 3D model generators go far beyond simple mesh creation. Their value lies in integrating multiple tools into a single environment, which removes the handoffs between separate applications. Below are core features commonly found in advanced platforms.

1. Text and Image to 3D Generation

At the foundation, AI generators transform ideas into 3D objects using text descriptions or reference images.

As text-to-3D generators, they convert prompts—such as “a miniature castle with moss-covered walls”—into textured models within seconds, enabling rapid concept exploration.

As image-to-3D generators, they construct geometry from one or more reference photos. Support for multi-view inputs helps produce models with more accurate proportions and helps avoid the flatness typical of single-image conversions.

These tools make 3D creation more accessible for beginners and significantly faster for professionals, with support for standard export formats such as GLB and FBX.
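As a small illustration of what a GLB export contains, the sketch below packs a glTF JSON document into the binary GLB container and reads it back. It follows the glTF 2.0 container layout (a 12-byte header followed by a JSON chunk). The function names `build_minimal_glb` and `read_glb_json` are hypothetical helpers written for this example, not part of any platform's API, and the sketch omits the optional binary chunk that real exports use for mesh data.

```python
import json
import struct

GLB_MAGIC = 0x46546C67   # ASCII "glTF" read as a little-endian uint32
JSON_CHUNK = 0x4E4F534A  # ASCII "JSON" read as a little-endian uint32


def build_minimal_glb(gltf: dict) -> bytes:
    """Pack a glTF JSON document into a GLB container with no binary chunk."""
    payload = json.dumps(gltf, separators=(",", ":")).encode("utf-8")
    payload += b" " * (-len(payload) % 4)  # JSON chunk is space-padded to 4 bytes
    chunk = struct.pack("<II", len(payload), JSON_CHUNK) + payload
    header = struct.pack("<III", GLB_MAGIC, 2, 12 + len(chunk))
    return header + chunk


def read_glb_json(data: bytes) -> dict:
    """Validate the 12-byte GLB header and return the parsed JSON chunk."""
    magic, version, length = struct.unpack_from("<III", data, 0)
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file")
    if version != 2:
        raise ValueError(f"unsupported GLB version {version}")
    if length != len(data):
        raise ValueError("declared length does not match file size")
    chunk_len, chunk_type = struct.unpack_from("<II", data, 12)
    if chunk_type != JSON_CHUNK:
        raise ValueError("first chunk must be JSON")
    return json.loads(data[20:20 + chunk_len].decode("utf-8"))
```

A check like `read_glb_json` can serve as a quick sanity test on a downloaded asset before it enters an engine import step.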

2. Intelligent Segmentation

Editing complex models manually is time-consuming. AI platforms increasingly include automated segmentation systems that analyze a model and break it into logical components, such as splitting a character into limbs or a vehicle into wheels, doors, and body sections.

This gives creators the ability to:

- Edit parts independently

- Generate modular variations, such as swapping accessories or components

- Streamline workflows for game development, prototyping, and 3D printing

Automated segmentation significantly reduces manual labor and improves efficiency across design tasks.
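To make the idea of splitting a model into parts concrete, here is a deliberately simple, non-AI stand-in: it groups triangles into parts purely by connectivity, so two shells that share no vertices become separate parts. Platform segmentation is semantic and would also split connected regions such as an arm from a torso; this sketch does not attempt that. The function name `split_into_parts` is an assumption made for the example.

```python
from collections import defaultdict


def split_into_parts(faces):
    """Group triangle indices into parts whose faces share at least one vertex."""
    parent = list(range(len(faces)))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    first_face_for_vertex = {}
    for face_index, face in enumerate(faces):
        for vertex in face:
            if vertex in first_face_for_vertex:
                # This vertex was seen before: merge the two faces' groups.
                a = find(face_index)
                b = find(first_face_for_vertex[vertex])
                parent[a] = b
            else:
                first_face_for_vertex[vertex] = face_index

    parts = defaultdict(list)
    for face_index in range(len(faces)):
        parts[find(face_index)].append(face_index)
    return sorted(parts.values())


# Two triangles forming a quad, plus one detached triangle.
faces = [(0, 1, 2), (2, 3, 0), (4, 5, 6)]
print(split_into_parts(faces))
```

Each returned list holds the face indices of one part, which could then be exported or edited independently.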

3. Smart Retopology

AI-generated geometry is often dense or unstructured, requiring cleanup before being used in production environments. AI-powered retopology tools automate this process by:

- Analyzing curvature and essential features

- Distributing polygons intelligently

- Maintaining clean edge flow around joints, faces, or mechanical seams

Users typically have the option to select quad- or triangle-based outputs, depending on whether the end goal is animation, hard-surface work, or real-time applications.

The result is a lighter mesh that renders efficiently while preserving the visual detail that matters for the target use.
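For a feel of what polygon reduction involves, the sketch below uses vertex clustering, a classical non-AI simplification technique: vertices that fall in the same grid cell are merged, and triangles that collapse are dropped. This is not retopology in the sense described above, since it ignores curvature and edge flow, and it is not the method of any particular platform. The name `cluster_decimate` and the `cell_size` parameter are assumptions for illustration.

```python
def cluster_decimate(vertices, faces, cell_size):
    """Merge vertices sharing a grid cell and drop collapsed or duplicate triangles."""
    cell_to_new_index = {}
    cell_members = []  # per new vertex: original positions merged into it
    remap = []         # old vertex index -> new vertex index

    for x, y, z in vertices:
        cell = (int(x // cell_size), int(y // cell_size), int(z // cell_size))
        if cell not in cell_to_new_index:
            cell_to_new_index[cell] = len(cell_members)
            cell_members.append([])
        new_index = cell_to_new_index[cell]
        cell_members[new_index].append((x, y, z))
        remap.append(new_index)

    # Each merged vertex sits at the average of the points it replaces.
    new_vertices = [
        tuple(sum(axis) / len(points) for axis in zip(*points))
        for points in cell_members
    ]

    new_faces = []
    seen = set()
    for a, b, c in faces:
        tri = (remap[a], remap[b], remap[c])
        if len(set(tri)) < 3:
            continue  # triangle collapsed to an edge or a point
        key = frozenset(tri)
        if key in seen:
            continue  # same triangle already emitted after merging
        seen.add(key)
        new_faces.append(tri)
    return new_vertices, new_faces
```

A larger `cell_size` merges more vertices and yields a coarser mesh, which is the basic trade-off any low-poly workflow has to manage.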

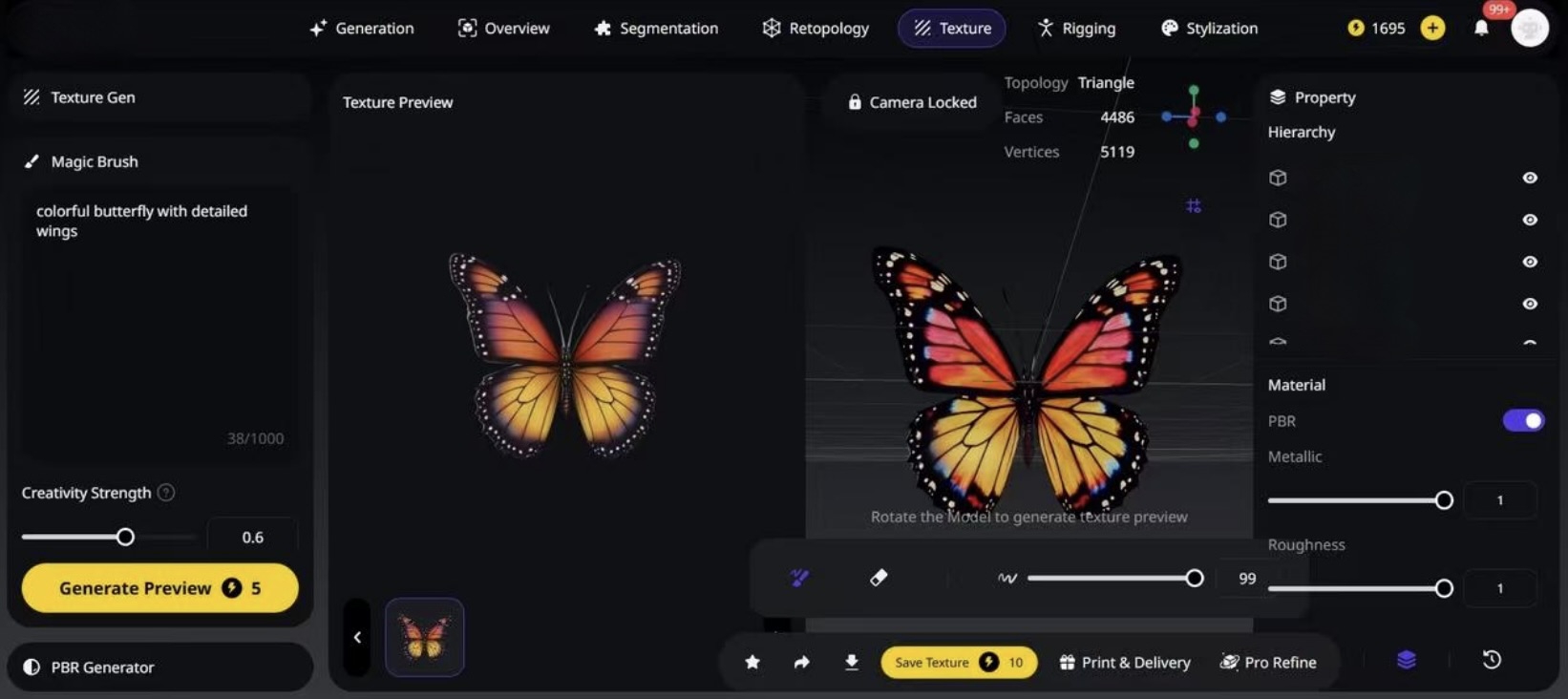

4. Advanced Texturing and AI-Assisted Painting

Texturing traditionally requires specialized skill and time, but AI-driven texturing systems now automate much of the process. Using descriptive text or reference images, these tools generate PBR-ready materials in seconds.

Some platforms include interactive refinement tools—such as AI-assisted brushes—that allow creators to:

- Fix or enhance textures

- Paint directly onto the surface of a model

- Maintain material consistency during edits

This blend of automation and hands-on control enables rapid iteration while preserving artistic flexibility.
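"PBR-ready" usually means the material is described by a small set of physically based parameters. As one common example, the sketch below builds a material entry in the glTF 2.0 metallic-roughness format, which is what a GLB export carries. The property names (`pbrMetallicRoughness`, `baseColorFactor`, `metallicFactor`, `roughnessFactor`) come from the glTF specification; the helper `make_pbr_material` and the sample values are assumptions for illustration, and generated materials would typically reference texture images rather than constant factors.

```python
def make_pbr_material(name, base_color, metallic, roughness):
    """Return a glTF 2.0 material dict using the metallic-roughness model."""
    for label, value in (("metallic", metallic), ("roughness", roughness)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{label} must be in [0, 1], got {value}")
    if len(base_color) != 4 or not all(0.0 <= c <= 1.0 for c in base_color):
        raise ValueError("base_color must be four RGBA values in [0, 1]")
    return {
        "name": name,
        "pbrMetallicRoughness": {
            "baseColorFactor": list(base_color),
            "metallicFactor": metallic,
            "roughnessFactor": roughness,
        },
    }


# A rough, non-metallic greenish surface, e.g. for moss-covered stone.
material = make_pbr_material("mossy_stone", (0.3, 0.4, 0.2, 1.0), 0.0, 0.9)
```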

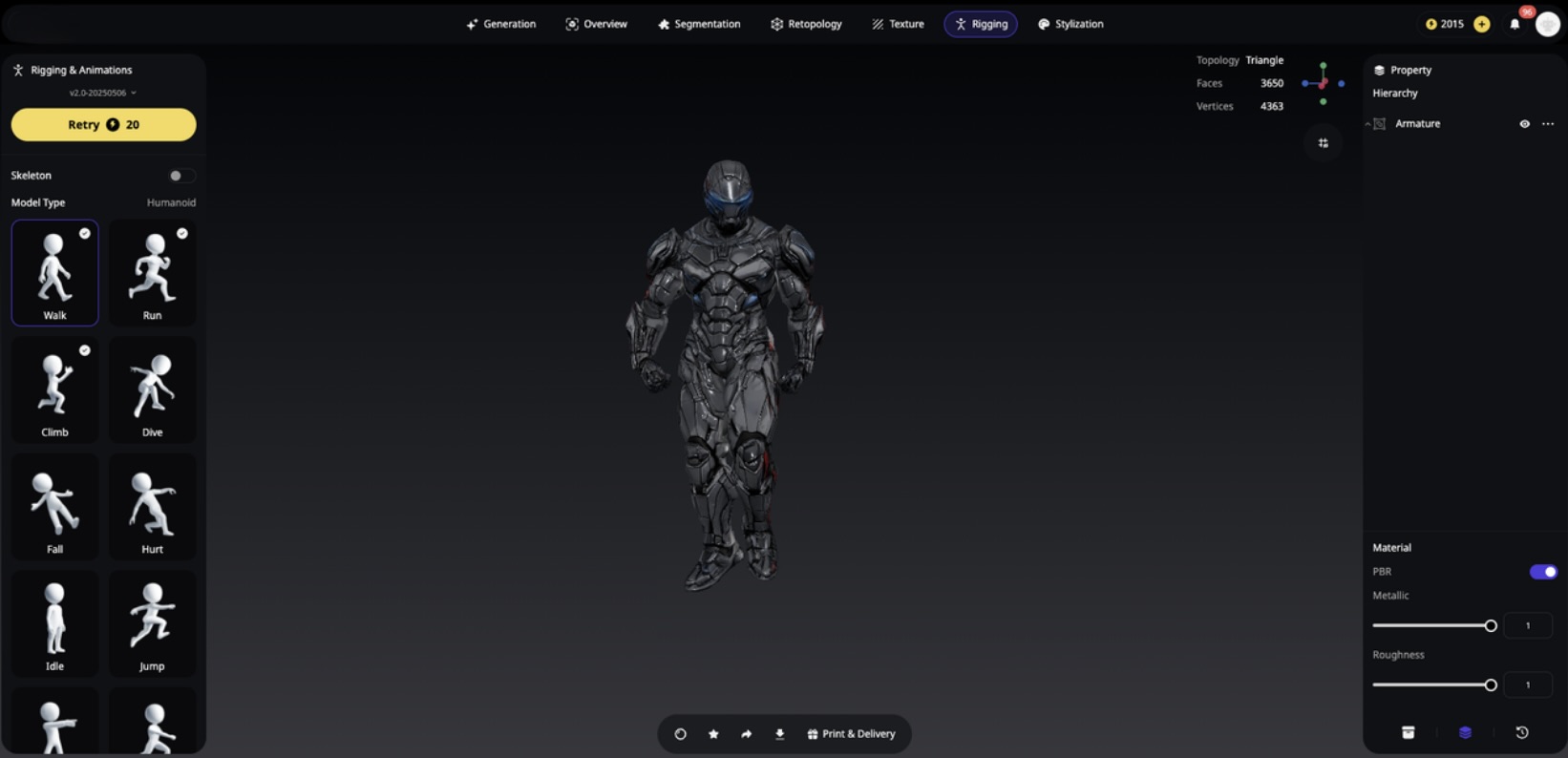

5. Semantic-Aware Automatic Rigging

Rigging, the process of preparing a model for animation by giving it a skeleton and binding the mesh to it, is often challenging and technical. An AI-based auto-rigging system analyzes the model's structure (humanoid, quadruped, or mechanical) and applies:

- A suitable skeletal layout

- Accurate joint placement

- Automated skin-weight assignments

With this automation, creators can transform static models into animation-ready assets without manual rig construction.
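Skin weights say how strongly each joint pulls on each vertex, and for a given vertex they should sum to 1. The sketch below assigns them with naive inverse-square-distance weighting to the nearest joints. This is only a toy stand-in: production auto-riggers rely on more robust methods, and nothing here reflects a specific platform. The function `skin_weights`, its parameters, and the joint names are assumptions for the example.

```python
import math


def skin_weights(vertex, joints, max_influences=4, eps=1e-8):
    """Return normalized inverse-square-distance weights to the nearest joints."""
    distances = [
        (math.dist(vertex, position), name) for name, position in joints.items()
    ]
    nearest = sorted(distances)[:max_influences]
    # eps avoids division by zero when a vertex sits exactly on a joint.
    raw = {name: 1.0 / (d * d + eps) for d, name in nearest}
    total = sum(raw.values())
    return {name: weight / total for name, weight in raw.items()}


joints = {"shoulder": (0.0, 0.0, 0.0), "elbow": (2.0, 0.0, 0.0)}
weights = skin_weights((0.5, 0.0, 0.0), joints)  # closer to the shoulder
```

Capping the number of influences per vertex mirrors a common constraint in real-time engines, which often limit how many joints can affect a single vertex.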

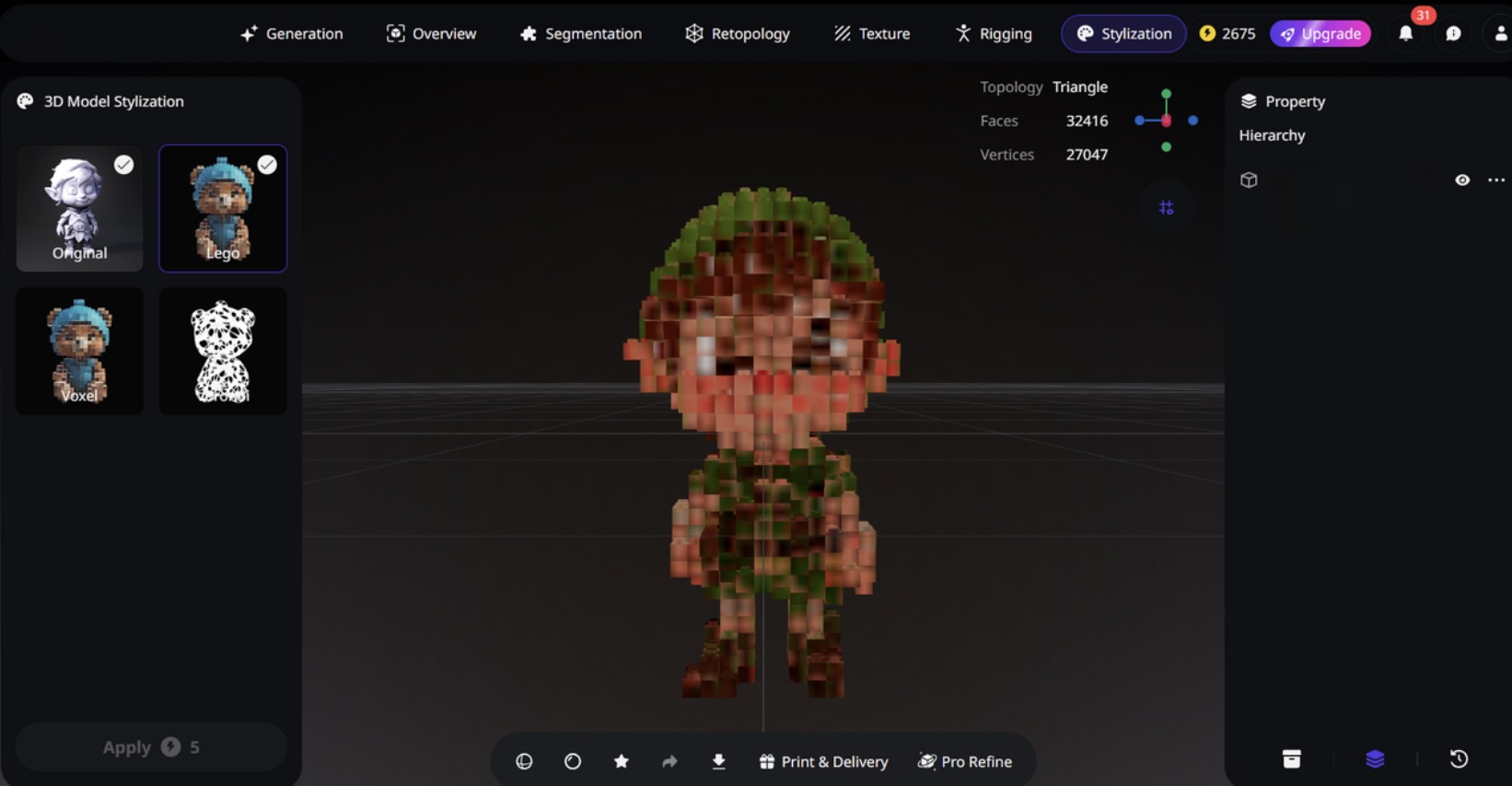

6. AI Model Stylization

Style transformation is another area where AI is reshaping workflows. Many platforms allow users to apply stylistic presets—such as voxel, toy-like, cel-shaded, or minimalist aesthetics—either during initial generation or as a post-processing step.

Key advantages include:

- Flexible creative exploration without manual remodeling

- Stylized output that remains compatible with standard rendering engines and pipelines

- Ability to generate asset variants for games, marketing, and visualization

This opens the door to diverse outputs from a single base model.

A Platform for Every Creator

AI-native 3D tools are now transforming workflows across various sectors:

- Indie game developers benefit from accelerated prototyping.

- Product designers iterate more quickly on new concepts.

- Architects bridge the gap between 2D drawings and immersive 3D visualization.

Many platforms offer a free tier for experimentation and professional tiers for high-volume production or commercial licensing, allowing creators at all levels to engage with unified AI workflows.

The Future of 3D Creation is Unified

AI-driven 3D creation tools represent a paradigm shift. Instead of relying on disconnected applications, creators can now work within integrated platforms that manage the entire technical pipeline. By unifying generation, segmentation, retopology, texturing, rigging, and stylization, these tools free designers to focus more on creative exploration and less on technical overhead.

The future of 3D content lies not only in faster generation, but in more intelligent, more connected workflows. AI-native platforms are well-positioned to lead this shift as industries increasingly adopt automated and unified approaches to 3D production.

Conclusion

As AI continues to reshape digital production, unified 3D creation platforms offer a more streamlined, accessible, and efficient path from concept to final asset. By reducing technical burdens and consolidating essential tools, these systems support creators across industries and skill levels. Whether used for rapid prototyping, product visualization, or large-scale content pipelines, AI-driven 3D workflows signal a broader shift toward integrated, intelligent creation methods that will continue to evolve with the technology.

Featured Image generated by Google Gemini.
